At CES 2026, NVIDIA announced what might be the most significant open-source AI release to date. The company unveiled new models, datasets, and tools spanning everything from speech recognition to drug discovery.

The scope is remarkable:

- 10 trillion language training tokens

- 500,000 robotics trajectories

- 455,000 protein structures

- 100 terabytes of vehicle sensor data

Major companies including Bosch, Salesforce, Uber, Palantir, and CrowdStrike are already building on these technologies.

Nemotron RAG: Smarter Document Search

Embedding Model: Llama-Nemotron-Embed-VL-1B-V2 (1.7B parameters)

Reranking Model: Llama-Nemotron-Rerank-VL-1B-V2 (1.7B parameters)

Also Available: 8B-parameter text-only embedding model

Context Length: Up to 8,192 tokens

License: Commercial use allowed

Finding information buried in documents is a daily struggle for knowledge workers. Nemotron RAG brings multimodal retrieval to document search: it processes both text and images and delivers accurate multilingual results across 26 languages.
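The 8,192-token context limit means longer documents have to be split before they are embedded. Below is a minimal sketch of sliding-window chunking. It assumes whitespace-separated words as a stand-in for real tokens (a production pipeline would count tokens with the embedding model's own tokenizer), and the function name and 256-token overlap are illustrative choices, not NVIDIA defaults.

```python
def chunk_tokens(tokens, max_tokens=8192, overlap=256):
    """Split a token list into windows of at most max_tokens.

    Consecutive windows share `overlap` tokens, so text near a chunk
    boundary keeps some of its surrounding context.
    """
    if not 0 <= overlap < max_tokens:
        raise ValueError("overlap must be in [0, max_tokens)")
    step = max_tokens - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + max_tokens])
        if start + max_tokens >= len(tokens):
            break  # this window already reaches the end of the document
    return chunks


# Usage: a 20,000-word document becomes three overlapping chunks
words = ["word"] * 20_000
print([len(c) for c in chunk_tokens(words)])  # [8192, 8192, 4128]
```

Each chunk is then embedded and stored separately, so a query can match the specific section of a long document that answers it.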

How It Works

The Nemotron RAG pipeline combines three components:

- Embedding Model: converts documents into vector representations for storage and retrieval

- Reranking Model: reorders the retrieved candidates by relevance, scoring each query-document pair with cross-attention

- Reasoning Model: generates accurate responses based on retrieved context
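The three stages can be sketched end to end in a few lines. This is a toy built on loud assumptions: bag-of-words count vectors stand in for the neural embedding model, a query-term overlap score stands in for the cross-attention reranker, and the reasoning model is represented only by the prompt it would receive. None of these function names are NVIDIA APIs.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy stand-in for the embedding model: a bag-of-words count vector."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(count * b[term] for term, count in a.items() if term in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, docs, k=3):
    """Stage 1: vector search. A real system precomputes and stores document vectors."""
    q = embed(query)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


def rerank(query, candidates):
    """Stage 2: toy stand-in for a cross-attention reranker, which scores each
    (query, document) pair jointly. Here: share of query terms found in the doc."""
    q_terms = set(embed(query))

    def score(doc):
        return len(q_terms & set(embed(doc))) / len(q_terms) if q_terms else 0.0

    return sorted(candidates, key=score, reverse=True)


def build_prompt(query, context):
    """Stage 3: the reasoning model would be called with this grounded prompt."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {query}"


docs = [
    "To reset your VPN password, open the self-service portal.",
    "The cafeteria menu changes every Monday.",
    "VPN connection errors are often caused by an expired certificate.",
]
query = "How do I reset my VPN password?"
top = rerank(query, retrieve(query, docs, k=2))
print(build_prompt(query, top[:1]))
```

The split matters because the cheap first stage can scan a large index, while the more expensive pairwise reranker only looks at the handful of candidates that survive it.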

Real-World Example: IT Help Desk Agent

NVIDIA demonstrated how these models work together in an IT Help Desk agent:

- Nemotron Nano 9B V2: primary reasoning model for generating responses

- Llama 3.2 EmbedQA 1B V2: converts documents into vector embeddings

- Llama 3.2 RerankQA 1B V2: reranks retrieved documents for relevance

Together, these models let the agent answer user queries accurately: the embedding model retrieves candidate documents, the reranker orders them by relevance, and the reasoning model generates the response from the top results.

Who's Using It

Cadence applies the models to logic design assets such as micro-architecture documents, constraints, and verification collateral. Engineers can ask questions like "I want to extend the interrupt controller to support a low power state, show me which spec sections need changes" and quickly surface the relevant requirements.

IBM is piloting these models to improve search and reasoning across technical documentation.

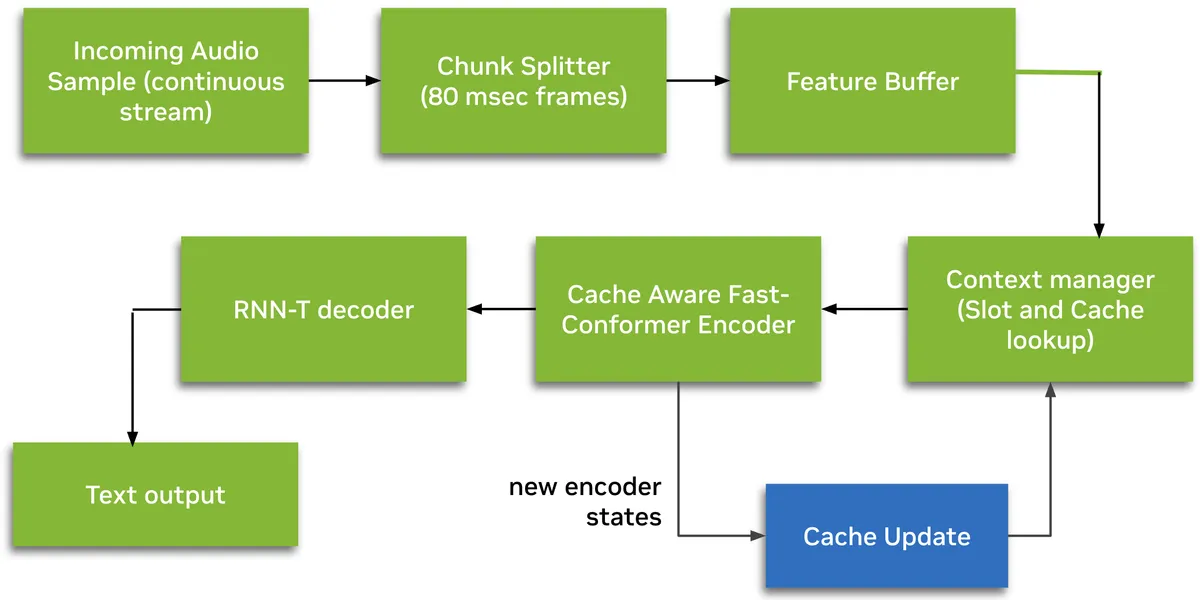

Nemotron Speech: Talk to Your Devices Like Never Before

Model: Nemotron-Speech-Streaming-En-0.6B

Parameters: 600M

Architecture: Cache-aware FastConformer encoder + RNN-T decoder

Latency: Sub-100ms streaming

License: Commercial use allowed

According to NVIDIA, Nemotron Speech delivers real-time speech recognition 10x faster than comparable models and tops current automatic speech recognition (ASR) leaderboards.

Key Features

- Cache-aware streaming architecture: processes only new audio chunks while reusing cached encoder context

- Runtime-configurable latency modes: 80ms, 160ms, 560ms, or 1.12s chunks without retraining

- Native punctuation and capitalization support

- Trained on 285,000 hours of audio data from the NVIDIA Granary dataset
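The latency modes and the cache-aware design can be made concrete with a little arithmetic. The sketch below assumes 16 kHz mono input (common for ASR, but an assumption here) and counts how many audio samples the encoder must process for a stream: a cache-aware encoder processes each new sample once and reuses cached context, while a naive streaming setup re-encodes everything received so far at every step. The count is idealized and ignores the fixed cost of attending over the cached context; the function names are hypothetical.

```python
SAMPLE_RATE = 16_000                     # assumption: 16 kHz mono audio
LATENCY_MODES_MS = [80, 160, 560, 1120]  # chunk sizes selectable at runtime


def chunk_samples(chunk_ms, sample_rate=SAMPLE_RATE):
    """Number of audio samples in one chunk."""
    return chunk_ms * sample_rate // 1000


def samples_encoded_cache_aware(total):
    """Each chunk is encoded once; earlier context is read from the cache."""
    return total


def samples_encoded_recompute(total, chunk):
    """Each step re-encodes the whole prefix received so far."""
    return sum(min(end, total) for end in range(chunk, total + chunk, chunk))


print([chunk_samples(ms) for ms in LATENCY_MODES_MS])  # [1280, 2560, 8960, 17920]

ten_seconds = 10 * SAMPLE_RATE
chunk = chunk_samples(160)
print(samples_encoded_cache_aware(ten_seconds))       # 160000
print(samples_encoded_recompute(ten_seconds, chunk))  # 5159680
```

At 160 ms chunks, re-encoding the prefix processes about 32x more samples over ten seconds of audio, and the gap keeps growing with stream length (quadratic versus linear work), which is why caching matters for long conversations.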

Who's Using It

Bosch is already using Nemotron Speech to let drivers interact with vehicles through voice commands. Separately, ServiceNow trains its Apriel model family on Nemotron datasets for cost-efficient multimodal performance.

This technology is likely to appear in smart home devices, customer service systems, and accessibility tools throughout 2026.

Clara: Faster Drug Discovery and Better Healthcare

La-Proteina: Atom-level protein design

ReaSyn v2: Drug synthesis feasibility

KERMT: Computational safety testing

RNAPro: RNA 3D shape prediction

Dataset: 455,000 synthetic protein structures

NVIDIA's new Clara AI models aim to bridge the gap between digital discovery and real-world medicine. While you won't interact with these models directly, they could significantly impact your healthcare.

Model Breakdown

| Model | Function | Impact |
|---|---|---|
| La-Proteina | Design large, atom-level-precise proteins | Study previously untreatable diseases |
| ReaSyn v2 | Incorporate synthesis feasibility into discovery | Prevent wasted research on impractical compounds |
| KERMT | Predict drug-body interactions | Catch problems before expensive clinical trials |
| RNAPro | Predict RNA 3D shapes | Enable personalized RNA-based therapeutics |

Bottom line: Treatments could reach patients faster and at lower cost.

Alpamayo: Making Self-Driving Cars Smarter

Model: Alpamayo-R1-10B

Parameters: About 10.5 billion (8.2B Cosmos Reason backbone + 2.3B action expert)

Training Data: 1+ billion images from 80,000 hours of multi-camera driving

Dataset: 1,700+ hours of driving data from 25 countries

License: Non-commercial (research)

NVIDIA's new Alpamayo family aims to accelerate the path to truly autonomous vehicles. NVIDIA describes it as the industry's first open reasoning vision-language-action (VLA) model designed for autonomous driving.

Key Innovation: Chain-of-Thought Reasoning

Unlike traditional AV systems that just detect objects and plan paths, Alpamayo uses chain-of-thought reasoning. It can:

- Process video input from multiple cameras

- Generate driving trajectories

- Explain the logic behind each decision

Example output: "Nudge to the left to increase clearance from the construction cones encroaching into the lane"
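Pairing a trajectory with a stated reason can be pictured as a structured record. In the sketch below, the `DrivingDecision` type, the coordinate convention, the vehicle half-width, and the cone positions are all hypothetical (this is not Alpamayo's output schema); the helper simply checks that a leftward nudge does increase the gap to cones encroaching from the right, as the example explanation claims.

```python
from dataclasses import dataclass


@dataclass
class DrivingDecision:
    """Hypothetical record pairing a planned path with its explanation."""
    reasoning: str
    waypoints: list  # (x, y) in meters; x forward, y to the left (assumed frame)


def min_clearance(waypoints, cones, half_width=0.95):
    """Smallest lateral gap between the vehicle's side and any cone within the
    path's longitudinal span. Toy geometry: compares lateral offsets only."""
    xs = [x for x, _ in waypoints]
    gaps = []
    for cone_x, cone_y in cones:
        if min(xs) <= cone_x <= max(xs):
            # Lateral position of the path at the waypoint closest to the cone
            _, path_y = min(waypoints, key=lambda p: abs(p[0] - cone_x))
            gaps.append(abs(path_y - cone_y) - half_width)
    return min(gaps) if gaps else float("inf")


cones = [(10.0, -1.5), (15.0, -1.4), (20.0, -1.3)]  # encroaching from the right
straight = DrivingDecision("Keep lane center", [(x, 0.0) for x in range(0, 30, 5)])
nudged = DrivingDecision(
    "Nudge to the left to increase clearance from the construction cones "
    "encroaching into the lane",
    [(0, 0.0), (5, 0.2)] + [(x, 0.4) for x in range(10, 30, 5)],
)
print(min_clearance(straight.waypoints, cones))  # about 0.35
print(min_clearance(nudged.waypoints, cones))    # about 0.75
```

Keeping the explanation next to the waypoints makes this kind of check possible: a reviewer or test harness can compare what the model says it is doing with what the trajectory actually does.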

What's Included

- Alpamayo 1: 10B reasoning VLA model on Hugging Face

- AlpaSim: open-source end-to-end simulation framework

- Physical AI Open Datasets: 1,700+ hours covering rare edge cases from 25 countries and 2,500+ cities

Who's Using It

Lucid Motors, JLR, Uber, and Berkeley DeepDrive are using Alpamayo to develop reasoning-based AV stacks for Level 4 autonomy.

Cosmos: Teaching Robots to Understand the Physical World

Cosmos Reason 2: 2B and 8B parameter versions

Context Window: 256K tokens (16x larger than v1)

Architecture: Based on Qwen3-VL

License: Commercial use allowed (NVIDIA Open Model License)

According to NVIDIA, robotics has become the fastest-growing segment on Hugging Face, with NVIDIA's models leading downloads.

Cosmos Model Family

| Model | Parameters | Function |
|---|---|---|
| Cosmos Reason 2 | 2B / 8B | Physical AI reasoning VLM for robots and AI agents |
| Cosmos Transfer 2.5 | - | Video-to-world style transfer |
| Cosmos Predict 2.5 | 2B / 14B | Future state prediction as video |

Key Features of Cosmos Reason 2

- Enhanced spatio-temporal understanding with timestamp precision

- 2D/3D point localization and bounding box coordinates

- Trajectory data output for robotic control

- OCR support for reading text in environments

- Chain-of-thought reasoning with `<think>` tags
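Applications usually need to separate the model's reasoning from its final answer. A minimal parsing sketch follows. It assumes the completion wraps its chain of thought in a single `<think>...</think>` pair and may optionally wrap the answer in `<answer>...</answer>`; check the model card for the exact output format. The function name is hypothetical.

```python
import re

THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)
ANSWER_RE = re.compile(r"<answer>(.*?)</answer>", re.DOTALL)


def split_reasoning(completion):
    """Return (reasoning, answer) from a completion that uses <think> tags."""
    think = THINK_RE.search(completion)
    if think is None:
        return "", completion.strip()  # no reasoning block emitted
    reasoning = think.group(1).strip()
    rest = completion[think.end():]    # the answer is assumed to follow the reasoning
    answer = ANSWER_RE.search(rest)
    return reasoning, (answer.group(1) if answer else rest).strip()


out = (
    "<think>The mug is left of the plate, so the gripper should move left "
    "before descending.</think>\n\n<answer>Move the gripper left.</answer>"
)
print(split_reasoning(out))
```

Logging the reasoning separately keeps it available for debugging while downstream robot or agent code consumes only the answer.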

Isaac GR00T N1.6: Humanoid Robot Foundation Model

Parameters: 3B

Base VLM: Cosmos-Reason-2B variant

Architecture: VLA with 32-layer diffusion transformer

GR00T N1.6 is an open vision-language-action model purpose-built for humanoid robots. It enables full-body control and uses Cosmos Reason for better contextual understanding.
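The source does not detail how the diffusion transformer produces actions. As a generic illustration of diffusion-style action generation (not GR00T's actual implementation), the sketch below starts a chunk of joint commands from random noise and integrates a velocity field toward clean actions with Euler steps, in the style of rectified-flow (flow matching) samplers. The `oracle_velocity` function is hypothetical: it knows the target, standing in for the learned transformer that would predict the velocity from camera images, the language instruction, and robot state.

```python
import random


def oracle_velocity(x, t, target):
    """Hypothetical stand-in for the learned transformer. On a straight-line
    (rectified-flow) path from noise to the clean actions, the exact velocity
    at time t is (target - x) / (1 - t). A real model predicts this from
    images, the language instruction, and robot state, without seeing target."""
    return [(g - xi) / (1.0 - t) for xi, g in zip(x, target)]


def sample_action_chunk(target, steps=8, seed=0):
    """Start from Gaussian noise and integrate the velocity field with Euler steps."""
    rng = random.Random(seed)
    x = [rng.gauss(0.0, 1.0) for _ in target]  # pure noise at t = 0
    dt = 1.0 / steps
    for i in range(steps):
        t = i * dt
        v = oracle_velocity(x, t, target)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x  # denoised actions at t = 1


joint_targets = [0.10, -0.30, 0.25, 0.00]  # hypothetical joint-position deltas (rad)
print([round(a, 6) for a in sample_action_chunk(joint_targets)])
```

With the oracle velocity, the samples land on the target exactly; a learned model only approximates the velocity, so the number of integration steps trades inference latency against action quality.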

Who's Using It

- Franka Robotics, Humanoid, and NEURA Robotics: simulate, train, and validate robot behaviors

- Salesforce, Hitachi, Uber, and VAST Data: traffic monitoring and workplace productivity

- Milestone: vision AI agents for public safety

Nemotron Safety: Building Trustworthy AI

Content Safety: Llama-3.1-Nemotron-Safety-Guard-8B-v3

PII Detection: Nemotron-PII (GLiNER-based)

License: Commercial use allowed

For businesses deploying AI, Nemotron Safety provides content safety models and personally identifiable information (PII) detection that NVIDIA reports as highly accurate.

Components

- Content Safety Model: expanded multilingual support with cultural nuance

- PII Detection: detects sensitive personal data before it leaks

- Topic Control: manages what topics the AI can discuss
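Where these components sit in a request path can be sketched as a small guard function. The regular expressions below are hypothetical stand-ins for the PII model (Nemotron-PII itself is GLiNER-based, not rule-based), the blocked-topic list is an invented policy, and the `topic` label is assumed to come from an upstream classifier that is not shown; a content safety classifier would sit alongside these checks.

```python
import re

# Hypothetical regex rules standing in for the PII model (Nemotron-PII is a
# GLiNER-based model, not a set of regular expressions)
PII_PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "PHONE": re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b"),
}

# Hypothetical topic-control policy for an IT help desk assistant
BLOCKED_TOPICS = {"medical advice", "legal advice"}


def redact_pii(text):
    """Replace each detected PII span with its label, e.g. [EMAIL]."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def guard(message, topic):
    """Return (allowed, text_to_forward). `topic` is assumed to come from an
    upstream topic classifier, which is not shown here."""
    if topic in BLOCKED_TOPICS:
        return False, "Sorry, I can't help with that topic."
    return True, redact_pii(message)


print(guard("My email is jane.doe@example.com, call 555-123-4567.", "it support"))
print(guard("Is this rash serious?", "medical advice"))
```

Running the checks before the main model sees the message means sensitive data never reaches the model's context or its logs.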

Who's Using It

- CrowdStrike, Cohesity, and Fortinet: strengthen AI application security

- CodeRabbit: powers AI code reviews with improved speed and accuracy

- Palantir: integrating the models into its Ontology framework to build specialized AI agents

What This Means for Everyone

All models and data are available now on GitHub and Hugging Face, and the models are also offered as NVIDIA NIM microservices for scalable deployment.
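As a rough sketch of calling a deployed model, the snippet below assumes an LLM NIM microservice that exposes an OpenAI-compatible `/v1/chat/completions` endpoint. The base URL, port, and model ID are placeholders, so check the documentation for the specific microservice. The snippet only builds the request with the Python standard library; the commented lines show how it would be sent.

```python
import json
import urllib.request


def build_chat_request(base_url, model, prompt, max_tokens=256):
    """Build (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# Placeholder host and model ID; substitute the values for your deployment
req = build_chat_request("http://localhost:8000", "your-model-id", "How do I reset my VPN password?")
print(req.get_method(), req.full_url)

# With a running service:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["choices"][0]["message"]["content"])
```

Because the request shape follows the OpenAI chat format, existing client code can usually be pointed at a self-hosted endpoint by changing only the base URL and model ID.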

Open Data Summary

| Dataset | Size | Content |
|---|---|---|
| Language tokens | 10 trillion | Multilingual reasoning, coding, safety |
| Robotics trajectories | 500,000 | Robot motion and manipulation |
| Protein structures | 455,000 | Synthetic structures for biomedical AI |
| Vehicle sensor data | 100 TB | Diverse driving conditions |
| Driving video | 1,700+ hours | Rare edge cases from 25 countries |

Links to Get Started

- Nemotron models: developer.nvidia.com/nemotron

- Cosmos models: github.com/nvidia-cosmos

- Alpamayo: developer.nvidia.com/drive/alpamayo

- Isaac GR00T: developer.nvidia.com/isaac/gr00t

For regular users, this release means better voice assistants, smarter document search, faster drug development, safer self-driving cars, and more capable robots. These technologies are expected to filter into consumer products throughout 2026.

NVIDIA is betting that by enabling the entire AI ecosystem, it will sell more GPUs. Judging by the companies already adopting these technologies, that bet appears to be paying off.