Forget everything you thought you knew about AI coding assistants. The Ralph Loop plugin for Claude Code isn't just another developer tool. It's the single most transformative advancement in AI-assisted development this year. And it solves the two problems that have plagued every AI coding workflow until now: context limits and human babysitting.

Traditional AI coding sessions hit a wall. You start strong, but as your project grows, the AI loses track of earlier decisions. You constantly re-explain. You copy-paste context. You babysit. The Ralph Loop shatters this pattern entirely. It runs autonomously for hours on end, maintaining coherent progress without ever running out of context, because each iteration starts fresh while building on tangible artifacts saved to your file system.

The outcomes speak volumes. One developer finished work worth $50,000 using only $297 in API fees. A team at Y Combinator woke up to find six fully functional repositories that the plugin had built while they slept. Someone even managed to create an entirely new programming language through three months of automated iteration.

This official Anthropic plugin takes its name from the Simpsons character Ralph Wiggum, famously dim but endlessly persistent. The plugin embraces that same philosophy: it's intentionally "dumb." No complex orchestration, no fancy state management. It simply feeds Claude the same prompt over and over until Claude actually finishes the job. And that simplicity is exactly what makes it powerful.

How it works

The underlying principle is remarkably straightforward. Rather than using Claude as a tool that responds once and waits for new instructions, this plugin establishes a continuous feedback cycle where the AI keeps refining its own output.

The process follows a simple pattern:

- you write a prompt once and it remains unchanged throughout

- whatever Claude builds gets saved to your file system

- your git history grows with each iteration

- on every pass, Claude examines what it previously created and improves upon it

- this continues until the work meets your defined success conditions

The technical implementation relies on intercepting Claude's attempts to exit, using a Claude Code Stop hook. Every time Claude believes it has finished and tries to stop, the plugin catches that attempt and starts the next iteration with identical instructions. The cycle ends only when Claude outputs the exact completion phrase you specified in advance, or when the iteration cap you set is reached.
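The cycle above can be sketched as a small Python simulation. This is not the plugin's code: `fake_agent` is a hypothetical stand-in for one Claude turn, and a dict stands in for the files on disk. What it does show is the essential shape: the prompt never changes, progress lives only in the workspace, and the loop exits on the exact promise tag or the iteration cap.

```python
from typing import Callable, Dict, Tuple

# Hypothetical stand-in for the file system: file name -> contents.
Workspace = Dict[str, str]


def fake_agent(prompt: str, workspace: Workspace) -> str:
    """Hypothetical agent: each call reads the workspace, does one unit of
    work, and stops; once nothing is left, it emits the completion promise."""
    for name in ("test_api.py", "api.py", "README.md"):
        if name not in workspace:
            workspace[name] = f"generated for: {prompt}"
            return f"Wrote {name}. Stopping here."
    return "All tests pass. <promise>DONE</promise>"


def ralph_loop(
    prompt: str,
    agent: Callable[[str, Workspace], str],
    completion_promise: str,
    max_iterations: int,
) -> Tuple[bool, int, Workspace]:
    """Re-run `agent` with the identical prompt until its output contains the
    promise tag or the cap is reached. Returns (finished, iterations, workspace)."""
    workspace: Workspace = {}  # persists across iterations, like files on disk
    marker = f"<promise>{completion_promise}</promise>"
    for iteration in range(1, max_iterations + 1):
        output = agent(prompt, workspace)  # same prompt on every pass
        if marker in output:
            return True, iteration, workspace
        # Agent tried to stop without the promise: intercept and go again.
    return False, max_iterations, workspace


if __name__ == "__main__":
    done, n, files = ralph_loop("Build a REST API with tests.", fake_agent, "DONE", 50)
    print(done, n, sorted(files))  # True 4 ['README.md', 'api.py', 'test_api.py']
```

Because `fake_agent` only inspects the workspace, it makes progress even though it receives the same prompt four times, which is the property the plugin relies on.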

What makes it powerful?

The real magic happens when you pair this with automated testing. Claude creates tests that initially fail, writes code to make them pass, executes the test suite, identifies what broke, repairs it, and starts again. No human needs to intervene at any point.

Like its cartoon namesake, the plugin doesn't try to be clever. It just keeps showing up and trying again. Every mistake becomes useful information that guides the next attempt. Through repeated cycles, the output gradually improves until it actually works. Dumb persistence beats fragile intelligence.

Documented outcomes

People have achieved remarkable things with this approach:

- Contract work at a fraction of the cost: work that would typically require a significant outsourcing budget was completed for minimal API expenses.

- Autonomous overnight development: teams have returned to their computers in the morning to find multiple complete projects waiting for them.

- Novel software creation: the plugin has even produced an original programming language, one that could not have appeared in any training data.

Setting it up

Getting started requires just a few steps. Download the Claude Code repository from Anthropic's GitHub, move the plugin folder into your local Claude configuration directory, and restart the application.

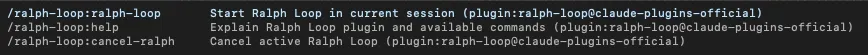

Two commands become available after installation. One initiates the loop, the other terminates it.

Your command structure will look something like:

`/ralph-loop:ralph-loop "Create a REST API supporting basic operations with full test coverage and documentation. Output <promise>DONE</promise> when tests succeed." --completion-promise "DONE" --max-iterations 50`

Setting an iteration cap is essential. Without this boundary, an imprecise prompt might cause endless cycling.
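The completion check deserves precision, because the loop ends only on the promise. Below is a minimal sketch of one plausible check, assuming (not quoting the plugin) that the text inside a `<promise>...</promise>` tag is compared to the `--completion-promise` value with surrounding whitespace ignored. `is_complete` is a hypothetical helper name.

```python
import re

# Captures the text inside each <promise>...</promise> tag (non-greedy, across lines).
PROMISE_TAG = re.compile(r"<promise>(.*?)</promise>", re.DOTALL)


def is_complete(output: str, completion_promise: str) -> bool:
    """Hypothetical check: True only if some <promise> tag contains exactly
    the expected phrase, ignoring surrounding whitespace."""
    return any(
        match.strip() == completion_promise
        for match in PROMISE_TAG.findall(output)
    )


if __name__ == "__main__":
    print(is_complete("All tests pass. <promise>DONE</promise>", "DONE"))  # True
    print(is_complete("Not DONE yet, 3 tests failing.", "DONE"))           # False
    print(is_complete("<promise>NOT DONE</promise>", "DONE"))              # False
```

Requiring the tag, rather than searching for the bare word, keeps a status line like "Not DONE yet" from ending the loop early. The iteration cap remains the backstop if the tag never appears.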

Appropriate applications

This approach excels in specific scenarios:

- projects with explicit, verifiable goals

- new codebases where the AI can work independently

- development workflows centered on passing automated tests

- any situation where machines can validate the output

Skip this tool when human evaluation is necessary, when you need a single quick change, or when you cannot clearly articulate what success looks like.

Crafting effective instructions

Your results depend entirely on how well you communicate with the system. Specify exactly what completion means in measurable terms. Explain how the AI should check its own work. Provide guidance for situations where progress stalls.

Weak instruction: "Make me a task management API that works well."

Strong instruction: "Create a task management API with standard database operations, proper error handling, minimum 80% test coverage, and usage documentation. Execute tests after every modification. Signal FINISHED once every test passes."
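One way to make sure a prompt carries all three ingredients (measurable completion, self-checking, and stall guidance) is to assemble it from named parts. The `LoopPrompt` class below is a hypothetical helper, not part of the plugin, and its field names are illustrative; it simply refuses to build a prompt with no success criteria. The usage rebuilds the strong instruction above, with an invented stall rule added.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class LoopPrompt:
    """Hypothetical prompt builder; field names are illustrative, not a plugin API."""
    goal: str
    success_criteria: List[str]  # measurable conditions that define "done"
    verification: str            # how Claude should check its own work
    when_stuck: str              # guidance for when progress stalls
    completion_promise: str = "DONE"

    def __post_init__(self) -> None:
        # No measurable criteria means the loop has nothing to converge on.
        if not self.success_criteria:
            raise ValueError("at least one measurable success criterion is required")

    def render(self) -> str:
        criteria = "\n".join(f"- {c}" for c in self.success_criteria)
        return (
            f"{self.goal}\n\n"
            f"Success criteria:\n{criteria}\n\n"
            f"Verification: {self.verification}\n"
            f"If stuck: {self.when_stuck}\n"
            f"When every criterion is met, output "
            f"<promise>{self.completion_promise}</promise>."
        )


if __name__ == "__main__":
    prompt = LoopPrompt(
        goal="Create a task management API with standard database operations.",
        success_criteria=[
            "proper error handling",
            "minimum 80% test coverage",
            "usage documentation",
        ],
        verification="Execute tests after every modification.",
        # Invented example of a stall rule; adapt it to your project.
        when_stuck="If the same test fails three iterations in a row, "
        "record what you tried in NOTES.md and try a different approach.",
        completion_promise="FINISHED",
    )
    print(prompt.render())
```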

Looking forward

This plugin signals a meaningful evolution in AI development tools. The industry is transitioning from technology that impresses in demonstrations to technology that delivers actual business value. Several organizations have already replaced external contractors with this automated approach for starting new projects.

What matters most is how skillfully you direct the process. Having access to a capable AI model is not enough on its own. Writing precise instructions with unambiguous success metrics makes the difference between wasted cycles and genuine productivity.

For those prepared to master the art of prompt design, this unassuming plugin opens doors to development workflows that seemed impossible until recently.